Answer:

The average time taken to process each line is (total processing time) ÷ (x + 4) milliseconds.

Explanation:

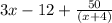

A computer takes

+ 2 milliseconds to process a certain program.

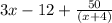

+ 2 milliseconds to process a certain program.

If the program has 4 lines of static code and x variable lines, the total number of lines to process is

⇒ Total lines = x + 4

The average time to process each line is the total time to process the program divided by the total number of lines:
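As a minimal sketch, the formula can be checked in Python, assuming the total time is already known as a number of milliseconds. The function name `average_ms_per_line` and the sample values (50 ms, 6 variable lines) are hypothetical and do not come from the original problem.

```python
from fractions import Fraction


def average_ms_per_line(total_ms, x):
    """Average processing time per line, in milliseconds.

    total_ms: total time to process the program (milliseconds).
    x: number of variable lines; the program also has 4 static lines.
    """
    total_lines = x + 4  # 4 static lines plus x variable lines
    if total_lines <= 0:
        raise ValueError("the program must have at least one line")
    # Exact division so the result is not rounded.
    return Fraction(total_ms) / total_lines


# Hypothetical run: 50 ms for a program with 6 variable lines (10 lines total).
print(average_ms_per_line(50, 6))  # 5
```

Using `Fraction` keeps the quotient exact, which matches how the answer is written as a fraction rather than a rounded decimal.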

Average time =

=

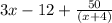

milliseconds

milliseconds
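When the total time is a polynomial in x, the quotient in the last step can be rewritten as a polynomial plus a remainder over (x + 4). The sketch below does this with synthetic division, assuming the coefficients are listed from the highest degree down. The function names and the sample polynomial x² + 5x + 6 are hypothetical and are not the program's actual timing expression.

```python
from fractions import Fraction


def divide_by_x_plus_4(coeffs):
    """Divide a polynomial by (x + 4) using synthetic division.

    coeffs lists coefficients from the highest degree down, e.g. [a, b, c]
    for a*x**2 + b*x + c. Returns (quotient_coeffs, remainder) such that
    poly(x) / (x + 4) == quotient(x) + remainder / (x + 4).
    """
    if not coeffs:
        raise ValueError("need at least one coefficient")
    root = -4  # x + 4 == x - (-4), so synthetic division uses -4
    running = []
    carry = 0
    for c in coeffs:
        carry = carry * root + c  # multiply by the root, add the next coefficient
        running.append(carry)
    remainder = running.pop()  # the last running value is the remainder
    return running, remainder


def evaluate(coeffs, x):
    """Evaluate a polynomial (highest degree first) with Horner's rule."""
    value = 0
    for c in coeffs:
        value = value * x + c
    return value


# Hypothetical total-time polynomial: x**2 + 5x + 6 milliseconds.
total = [1, 5, 6]
quotient, remainder = divide_by_x_plus_4(total)
print(quotient, remainder)  # [1, 1] 2

# Spot-check the identity at x = 2 with exact fractions.
x = 2
lhs = Fraction(evaluate(total, x), x + 4)
rhs = evaluate(quotient, x) + Fraction(remainder, x + 4)
assert lhs == rhs
```

For the hypothetical polynomial this reads the average as x + 1 + 2/(x + 4) milliseconds per line.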

So the average time taken to process each line is (total processing time) ÷ (x + 4) milliseconds.