Answer:

Explanation:

The distance between the radar station and the plane is related to the plane's horizontal and vertical distances from the station by the Pythagorean theorem:

$$r^2 = x^2 + y^2$$

Where:

- $x$ - Horizontal distance of the plane with respect to the radar station, in miles.

- $y$ - Vertical distance of the plane with respect to the radar station, in miles.

- $r$ - Distance between the plane and the radar station, in miles.

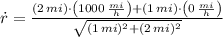

The rate at which the distance from the plane to the station is increasing is found by differentiating the previous expression implicitly with respect to time and substituting all known values:
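As a minimal sketch of this step: differentiating $r^2 = x^2 + y^2$ with respect to $t$ gives $2r\,\frac{dr}{dt} = 2x\,\frac{dx}{dt} + 2y\,\frac{dy}{dt}$, so $\frac{dr}{dt} = \frac{x\,\frac{dx}{dt} + y\,\frac{dy}{dt}}{r}$. The function name `distance_rate` and the sample numbers below are hypothetical illustrations, not the values of the original problem.

```python
import math


def distance_rate(x: float, y: float, dx_dt: float, dy_dt: float) -> float:
    """Return dr/dt for r = sqrt(x**2 + y**2).

    From 2*r*dr/dt = 2*x*dx/dt + 2*y*dy/dt it follows that
    dr/dt = (x*dx/dt + y*dy/dt) / r.
    """
    r = math.hypot(x, y)  # current plane-to-station distance
    if r == 0:
        raise ValueError("dr/dt is undefined when the plane is at the station (r = 0)")
    return (x * dx_dt + y * dy_dt) / r


# Hypothetical values: horizontal distance 3 mi, vertical distance 4 mi,
# horizontal speed 5 mi/h, vertical distance not changing (dy/dt = 0).
print(distance_rate(3.0, 4.0, 5.0, 0.0))  # 3.0
```

With distances in miles and rates in miles per hour, the result is also in miles per hour. If the plane flies at a constant altitude, pass `dy_dt=0`.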