Answer:

The distance between the interference fringes increases.

Step-by-step explanation:

For double-slit interference, the distance of the m-order maximum from the centre of the distant screen is

where

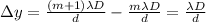

is the wavelength, D is the distance of the screen, and d the distance between the slits. The distance between two consecutive fringes (m and m+1) will be therefore


and we see that it is inversely proportional to the distance between the slits, $d$. Therefore, when the separation between the slits decreases, the distance between the interference fringes increases.
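As a quick numerical check of the fringe-spacing formula $\Delta y = \lambda D / d$ (small-angle approximation), here is a minimal sketch. The wavelength (600 nm), screen distance (1 m) and slit separations (0.5 mm, then halved to 0.25 mm) are hypothetical example values, not taken from the question, and the function name `fringe_spacing` is chosen only for illustration.

```python
def fringe_spacing(wavelength, screen_distance, slit_separation):
    """Spacing between consecutive bright fringes, lambda * D / d (small-angle approximation)."""
    return wavelength * screen_distance / slit_separation

# Hypothetical example values (not from the original answer), all in metres
wavelength = 600e-9      # 600 nm light
screen_distance = 1.0    # screen 1 m from the slits
d_wide = 0.5e-3          # slit separation 0.5 mm
d_narrow = 0.25e-3       # slit separation halved to 0.25 mm

spacing_wide = fringe_spacing(wavelength, screen_distance, d_wide)
spacing_narrow = fringe_spacing(wavelength, screen_distance, d_narrow)

# Print separations and spacings in millimetres
print(f"d = {d_wide * 1e3:.2f} mm -> fringe spacing = {spacing_wide * 1e3:.2f} mm")
print(f"d = {d_narrow * 1e3:.2f} mm -> fringe spacing = {spacing_narrow * 1e3:.2f} mm")
# prints:
# d = 0.50 mm -> fringe spacing = 1.20 mm
# d = 0.25 mm -> fringe spacing = 2.40 mm
```

Halving $d$ doubles the fringe spacing, consistent with the inverse proportionality above.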